Authored By: Mr. Makrand Chauhan (LL.M), & Co-Authored By: Ms. Ifrah Khan (LL.M), O.P. Jindal Global University, Jindal Global Law School, Sonipat, Haryana.

INTRODUCTION:

“An autonomous (or driverless) vehicle is one that is able to run on its own and perform routine functions without any external human intervention. Its ability to do so emanates from its technological capacity to make sense of its surroundings and to use a fully automated driving system, which allows it to respond to external conditions that until now only a human driver could manage. Most fully autonomous and connected vehicles are essentially products which combine the troika of hardware, software and related service components. While the advantage of such vehicles is arguably their safety, owing to the elimination of human error, these systems ultimately work through computer machinery which is not flawless and is prone to technical errors from time to time. This paper seeks to answer the question of who should be liable when an accident is caused in autonomous mode, and briefly explores the legal issues surrounding liability for accidents caused by such vehicles.

In examining that issue, this paper first discusses what autonomous driving is, its various types and the challenges which these vehicles currently face. It then turns to the liability issues that inevitably attach to accidents that may occur when AVs ply the roads, examined through product liability and especially through the lens of US law. The penultimate section elaborates upon the ethical considerations behind the decision-making that should go into the algorithmic structure of AVs so that accidents on roads and highways can be minimized, if not avoided. Finally, the concluding section proposes a long-term solution for drivers interested in autonomous vehicles.”

I. THE CONCEPT OF AUTONOMOUS DRIVING:

One of the most celebrated subjects of discussion in today’s media and, occasionally, an emotionally charged one, autonomous driving has finally escaped the confines of our imaginations and found its way onto our roadways.[1] Major companies that have traditionally invested in developing high-tech transportation systems, such as Nissan, Toyota, Lexus, Hyundai and Uber, have already started developing autonomous vehicles (AVs). Google, the tech giant behind the most widely used search engine, finds this idea so important that it has already declared it one of its five most important business projects.[2] Given the right market conditions, AVs have the potential to radically transform the transportation scene in the near future through their sheer capacity to manoeuvre themselves on current road and highway systems in varying environmental contexts with practically no direct human input.[3] In fact, Chris Urmson, Director of the Google Self-Driving Car project, announced in an article in 2014[4] that the company was keen on embarking upon this journey through continuous learning and progressive adaptation without losing its focus on safety. In his vision, this would not only be a crucial step towards improving road safety in general, but would also transform mobility for many citizens who have hitherto lacked it.

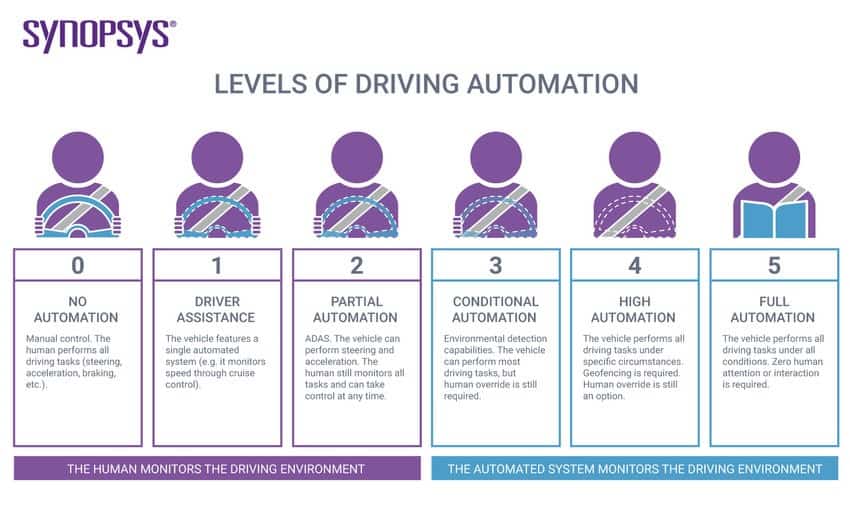

The Society of Automotive Engineers (SAE) differentiates between the terms ‘autonomous’, ‘automated’ and ‘self-driving’ for such vehicles. At the terminological level, it prefers ‘automated’ over ‘autonomous’. This is because a fully automated car could be expected to follow orders and then drive itself, whereas a fully ‘autonomous’ one would be self-aware and capable of making its own decisions independently of the owner or driver. Furthermore, the SAE sets out six levels of driving automation ranging from 0 (fully manual) to 5 (fully autonomous), which have been adopted by the U.S. Department of Transportation.[5]

For the first three levels, humans are largely responsible for monitoring the driving. As the levels increase, increasingly sophisticated software assists humans in their driving. Level 0 is represented by most vehicles on roads today, which despite incorporating high-tech gadgets can largely be said to be ‘manually controlled’; the final level, Level 5, represents the utopian scenario in which vehicles would require no human intervention at all.
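The six-level scale described above can be sketched as a small lookup. This is only an illustrative sketch: the level names follow the commonly published SAE labels, while the class name `SAELevel` and the helper `human_monitors_driving` are hypothetical names introduced here, and the cut-off at Level 2 simply encodes the statement that humans monitor the driving for the first three levels.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving-automation levels, from 0 (fully manual) to 5 (fully autonomous)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_monitors_driving(level: SAELevel) -> bool:
    """Return True for the first three levels (0 to 2), where the human monitors the driving."""
    return level <= SAELevel.PARTIAL_AUTOMATION


# Print who is expected to monitor the driving environment at each level.
for level in SAELevel:
    who = "human" if human_monitors_driving(level) else "automated system"
    print(f"Level {level.value} ({level.name}): monitored by {who}")
```

A classification of this kind matters for the liability discussion that follows, because the question of who was expected to be monitoring the road at the time of an accident depends on the level at which the vehicle was operating.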

II. CHALLENGES FACED BY AUTONOMOUS VEHICLES:

II.I Safety:

According to a survey released by the U.S. Department of Transportation, autonomous vehicles have the capacity to dramatically reduce road crashes caused by human error.[6] However, given the many unforeseen and chaotic scenarios that arise on roads, even the most technologically advanced countries will struggle to introduce AVs universally across the globe. Whatever the approach, the highest norm to be taken into consideration is the safety of passengers as well as of those on the road around them. Holding the manufacturer liable for accidents caused by such vehicles is perhaps the most convenient way for courts across jurisdictions to ensure safety.

II.II Efficiency:

Autonomous driving systems currently in development incorporate cruise control, which, in addition to saving travel time, is touted to contribute to fuel savings through a smoother traffic flow.[7] Besides making commuting safer, AV technology can help harmonize traffic flow by precisely controlling individual automobiles through anticipation and inter-vehicle collaboration.[8] However, this revolutionary idea is likely to pose a direct challenge to privacy and autonomy, since such collaboration depends on vehicles sharing information about their movements and ceding some control over them. It is therefore suggested that any plan for implementing efficient AV systems must proceed with sufficient caution and take these and similar factors into account.

II.III Mobility:

When the reasons for crashes were investigated in 2015, it was found that human drivers contributed to nearly 94% of all traffic accidents.[9] Additionally, another survey revealed that traffic accidents were the single biggest cause of death among people aged 15 to 29 across the globe.[10] For the sake of a safer driving experience, the technology behind autonomous driving could assist people in their transition from novice to experienced drivers with a lower tendency to cause accidents. The challenge here, however, would be a fundamental change in consumer behaviour, viz. the exchange of vehicle ownership for sharing or pooling, which in turn could prompt transportation companies to design their future autonomous fleets around artificial intelligence. This might require further intervention from a panel of AI technologists, including developers, scientists and engineers.

III. THE ISSUE OF LIABILITY:

AVs have been an active area of research for many decades. While perception of the environment remains the biggest challenge, the issue of liability also demands due attention. From a purely technological point of view, an autonomous vehicle is largely loaded with components of the kind increasingly seen in high-end electronic devices such as iPads and iPhones. However, the absence of sufficiently sophisticated technological equipment can prove very dangerous for the driver of an autonomous vehicle.

III.I Product Liability Regime:

Since the car manufacturer should be legally responsible when a defect or error in its computer program causes an accident, society will need to decide how to impose financial responsibility on the manufacturer. In the case of AVs, the defect could lie in the program, sensors, cameras, GPS, or whatever other technology is brought into play. This liability can be imposed on the manufacturer because of the harm caused by using the product. Within product liability, three principal liability doctrines are available to litigants who wish to sue product manufacturers:

III.I.I Manufacturing Defect:

A manufacturing defect is one where the manufacturer fails to comply with the norms and standards set for the construction of an autonomous vehicle and harm is caused as a result. For instance, if the brake or clutch system of an autonomous vehicle does not work as the user expects, it might be because a screw was left loose somewhere on that vehicle.[11] This can be referred to as a manufacturing defect. In such cases, the manufacturer can be held strictly liable. According to Kevin Funkhouser, even though defects of this kind are becoming less common with the advancement of technology, they may still occur every now and then due to the sheer volume of production.[12]

III.I.II Design Defect:

A design defect is one where the manufacturer, knowing that there was a foreseeable risk of harm, nevertheless went ahead and designed the product in a way that could cause harm. It assumes that there was an alternative design the manufacturer could have adopted which would have reduced the foreseeable harm, but that corners were cut and the alternative was not adopted. Such claims can be arduous and expensive to pursue. The customer involved in a crash has to prove that there is a defect in the design, and can generally do so only by bringing in experts to give evidence of it. A plaintiff asserting a design defect must show the existence of a ‘defect’ under the law applicable in the particular state. It also becomes necessary to show that there was a viable alternative design which would have reduced the risk and thus the overall possibility of a mishap.

III.I.III Warning Defect:

Another possibility is that, when companies bring AVs to market, the vehicles have certain inherent limitations which the manufacturers need to point out, for example that regardless of autopilot the driver might have to intervene in certain scenarios. Simply put, AVs could also be found defective for lacking appropriate warnings. A warning defect is thus said to occur when a manufacturer fails to inform purchasers of hidden dangers or fails to inform consumers how to use its products safely.[13] In fact, Section 2(c) of the Restatement (Third) of Torts: Products Liability defines a ‘failure to warn’ defect in the following terms:

“A product…is defective because of inadequate instructions or warnings when the foreseeable risks of harm posed by the product could have been reduced or avoided by the provision of reasonable instructions or warnings …and the omission of the instructions or warnings renders the product not reasonably safe.”[14]

Unfortunately, there is neither any consensus on the conditions in which warnings are required nor any unequivocal test as to when existing warnings are sufficient. Also, some jurisdictions are hesitant to recognize the malfunction doctrine in connection with this kind of defect. In Cotton v. Buckeye Gas Products Co., the court explained that, where a plaintiff claims a ‘failure to warn’ on the part of the manufacturer, the cost of a warning lies primarily in the time and effort users must spend to grasp its message, so that adequacy has to be assessed against the cumulative set of warnings rather than only the one keyed to a particular accident.[15]

IV. ETHICAL DECISION-MAKING IN AUTONOMOUS VEHICLES:

At present, as the world experiences successive technological revolutions, considerable attention has been given to the ethics of AVs. The area most specifically discussed is the decision-making approach in accident situations in which human harm is a conceivable outcome. Starting from the premise that human harm is not entirely avoidable, numerous authors have offered differing accounts of what morality requires in these circumstances. Two results that have been proposed are a methodology for AV ethical decision-making and the Ethical Valence Theory.[16] The Ethical Valence Theory frames autonomous vehicle decision-making as a form of claim mitigation: different road users hold different moral claims on the vehicle’s conduct, and the vehicle should mitigate these claims as it makes decisions about its surrounding environment.[17] For AVs, the harm produced by any action and the uncertainties associated with it are quantified and accounted for through deliberation, resulting in an ethical implementation that is consistent with reality. The objective of this approach is not to determine how moral theory requires vehicles to behave, but rather to provide a computational approach flexible enough to accommodate various ‘moral positions’ concerning what morality demands and what road users may expect.[18] It thus offers an evaluation tool for the social acceptability of an AV’s ethical decision-making.

IV.I Concerns of Algorithmic Ethical Decision-making in autonomous vehicles:

Ethics is inherent in various driving situations, since driving involves allocating risk among people both in accidents and in routine situations, such as choosing the distance to keep from an adjacent vehicle.[19] Since the decisions of AVs carry consequential risks, their risk-allocation decisions will be judged against traffic laws and regulations as well as ethical rules and guidelines.[20] Researchers thus highlight the need for AVs to follow ethical rules, which refer broadly to ethical theories, standards, principles and norms in decision-making, and which can be specified and programmed into autonomous vehicles using several proposed approaches.[21] Proposals to specify ethical rules for autonomous vehicles have revolved around the use of moral dilemmas in psychological studies.[22] An ethical dilemma is a situation in which it is ‘difficult to choose among different potential choices without abrogating one good standard.’[23] A famous thought experiment is the trolley problem, which can be illustrated through a hypothetical situation in which an AV’s brakes are faulty and it can either continue on its present path and collide with five pedestrians or swerve and collide with one pedestrian.[24] While trolley problems can reveal people’s moral preferences and key decision-making rules, they make unrealistic assumptions about driving situations, such as assuming that outcomes are certain and that the passenger can determine how the harm is distributed.[25]

They are thus susceptible to inconsistencies in moral reasoning among their participants and do not consider the effects of aggregating decisions that are morally justifiable on their own yet may build larger systemic patterns, for example, discrimination.[26] Other assumptions include the presence of only one decision-maker, the immediacy of the decisions, and the limited range of considerations.[27]

Apart from psychological studies and experiments, two broad technical approaches have been proposed for programming ethical rules into autonomous vehicles’ algorithms. The top-down (hierarchical) approach involves mapping a set of ethical theories, such as utilitarianism and deontology, onto computational requirements and programming them into the algorithm; yet programming each ethical theory brings its own set of limitations and issues that can undermine autonomous vehicle safety and perpetuate discrimination.[28] Utilitarianism emphasizes the morality of outcomes and, with regard to AVs, suggests programming algorithms to minimize the harm from accidents.[29] While this might look good in theory, in practice it can introduce bias and implementation challenges that give rise to new risks and potential discrimination. Utilitarian algorithms would compute every possible outcome, the alternative actions and their associated consequences, and minimize a cost function.[30]
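The idea of enumerating outcomes and minimizing a cost function can be illustrated with a minimal sketch. Everything in it is assumed for illustration: the action names, the probabilities and the harm scores are hypothetical numbers, and expected harm (probability multiplied by harm) is only one possible choice of cost function, not one prescribed by the literature cited here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float  # chance that this outcome follows the action
    harm: float         # hypothetical harm score (higher is worse)


def expected_harm(outcomes: list[Outcome]) -> float:
    """Cost function: probability-weighted sum of harm over all outcomes."""
    return sum(o.probability * o.harm for o in outcomes)


def choose_action(options: dict[str, list[Outcome]]) -> str:
    """Return the action whose possible outcomes carry the lowest expected harm."""
    return min(options, key=lambda name: expected_harm(options[name]))


# Hypothetical trolley-style scenario: staying in lane risks five pedestrians,
# swerving risks one. All figures are illustrative assumptions.
options = {
    "stay_in_lane": [Outcome(0.9, 5.0), Outcome(0.1, 0.0)],
    "swerve": [Outcome(0.9, 1.0), Outcome(0.1, 0.0)],
}

print(choose_action(options))  # prints "swerve"
```

The sketch also makes the practical difficulty visible: the result depends entirely on how the probabilities and harm scores are estimated, which is where perception errors and contested value judgments enter.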

Nonetheless, utilitarianism as built into the algorithm comes with its own drawbacks. It is framed as an optimization problem that minimizes aggregate rather than individual harm. Utilitarian algorithms do not consider equity or fairness and may even use inappropriate characteristics as decision criteria, leading to biased risk-allocation decisions.[31] For example, the algorithm may pick actions that allocate more risk to ‘more protected’ road users, such as those who wear a helmet while riding, since they could be expected to suffer less severe injuries than those who are ‘less protected’.[32]
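The helmet example can be reproduced in a few lines. This is a deliberately simplified sketch with made-up injury estimates; the function name `least_harm_target` and the figures are hypothetical. It shows only that a pure harm-minimizing rule, given nothing but expected injury, ends up steering risk towards the better-protected road user.

```python
def least_harm_target(expected_injury: dict[str, float]) -> str:
    """Pick the road user for whom a collision is expected to cause the least injury."""
    return min(expected_injury, key=expected_injury.get)


# Hypothetical injury estimates: the helmet lowers the rider's expected injury.
expected_injury = {
    "rider_with_helmet": 0.3,
    "rider_without_helmet": 0.8,
}

print(least_harm_target(expected_injury))  # prints "rider_with_helmet"
```

Nothing in the rule refers to fairness or to who took precautions, which is why the output penalizes the more careful rider.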

This could create “false incentives”, such as not wearing a helmet on a bike, and would penalize those who took precautions.[33] Moreover, risks can arise from the technical challenges of implementing utilitarian ethics, for example, possible errors and delays in machine perception that undermine the autonomous vehicle’s ability to compute every possible outcome and action within a short period, and the difficulty of defining the algorithm’s decision-making criteria.[34] Deontology, when built into the algorithm of AVs, stresses that actions be motivated by respect for humanity in general, yet it brings other difficulties that may create unknown safety risks for these vehicles. Deontological rules make the reasoning behind the algorithm’s decision-making explicit.[35] The best-known example is “Asimov’s Three Laws of Robotics”.[36] However, the algorithm could be forced to settle for suboptimal choices just to adhere strictly to its rules.

An example is instructing the autonomous vehicle to halt when rules conflict or cannot be complied with. This creates risks for other road users and hinders the autonomous vehicle’s adaptability to new conditions, unlike utilitarian-oriented algorithms, which can readily adjust the probabilities and magnitudes of outcomes to optimize the decisions made.[37] Also, deontological rules might not cover the full range of driving situations.[38]
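A rule-based (deontological) controller with a halt fallback might be sketched as follows. The three rules and the action names are hypothetical, chosen only to show the mechanism: every rule must permit an action, and when no action passes, the vehicle falls back to halting.

```python
from typing import Callable, Sequence

# A rule returns True when it permits the given action.
Rule = Callable[[str], bool]


def never_hit_obstacle(action: str) -> bool:
    # Hypothetical scenario: continuing straight would hit an obstacle ahead.
    return action != "continue"


def never_cross_solid_line(action: str) -> bool:
    return action != "cross_solid_line"


def never_mount_pavement(action: str) -> bool:
    return action != "mount_pavement"


def decide(actions: Sequence[str], rules: Sequence[Rule], fallback: str = "halt") -> str:
    """Return the first action permitted by every rule, or the fallback if none is."""
    for action in actions:
        if all(rule(action) for rule in rules):
            return action
    return fallback


rules = [never_hit_obstacle, never_cross_solid_line, never_mount_pavement]

# Every available manoeuvre breaks some rule, so the vehicle halts.
print(decide(["continue", "cross_solid_line", "mount_pavement"], rules))  # prints "halt"

# When a permitted manoeuvre exists, it is chosen.
print(decide(["continue", "change_lane"], rules))  # prints "change_lane"
```

The reasoning is fully explicit, which is the attraction of this approach, but the first call also shows the rigidity described above: the controller cannot weigh a minor rule violation against the danger that a sudden halt poses to other road users.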

Moreover, many deontological rules are embedded in the legal ambiguities of existing traffic regulations that cannot be expressed explicitly in algorithms, for example, the differing meanings of “barricade” or “secure” in different situations.[39] Given the individual limitations of utilitarian and deontological theories, researchers propose combining both theories to broaden the AV’s understanding of the situation before it makes a decision.

Such a combination can function well. One example is found in many organ donation programmes, where deontological ethics justify the first-come, first-served principle while utilitarian ethics justify prioritizing the sickest recipients.[40]

IV.II Ethical Valence Theory:

The Ethical Valence Theory is best understood as a form of moral claim mitigation.[41] ‘The philosophy of this theory in itself revolves around moral claim mitigation’.[42] Its principal assumption is that each road user in the surrounding environment holds a specific claim on the vehicle’s conduct. In a nutshell, each person, from pedestrian to passenger, has a specific expectation regarding how the vehicle will treat him or her in its deliberation, which is further supported by facts about that individual’s welfare.[43]

Theoretically, the Ethical Valence Theory portrays the autonomous vehicle as a kind of environmental entity whose behaviour is directly motivated by the claims of its surrounding environment.[44] These claims may vary in strength: for example, the claim of a pedestrian is stronger than the claim of a passenger in a vehicle if the pedestrian stands to be more seriously injured in an accident caused by the AV.[45] The objective of the AV is to satisfy as many claims as possible to the greatest possible extent as it moves through its environment, responding in proportion to the strength of each claim.[46]
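Claim mitigation of this kind could be approximated by weighting the expected harm to each road user by the strength of that user’s claim. The sketch below is an assumption-laden illustration rather than the formulation used by the theory’s authors: the `Claim` class, the strength values and the harm estimates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    holder: str      # road user holding the claim
    strength: float  # hypothetical valence; higher means a stronger claim


def unmet_claim_cost(harm_by_holder: dict[str, float], claims: list[Claim]) -> float:
    """Sum of expected harm to each claimant, weighted by the strength of the claim."""
    return sum(c.strength * harm_by_holder.get(c.holder, 0.0) for c in claims)


def choose_by_claims(options: dict[str, dict[str, float]], claims: list[Claim]) -> str:
    """Return the action that leaves the least strength-weighted harm unmitigated."""
    return min(options, key=lambda name: unmet_claim_cost(options[name], claims))


# Assumption: the pedestrian's claim is twice as strong as the passenger's.
claims = [Claim("pedestrian", 2.0), Claim("passenger", 1.0)]

# Hypothetical expected harm to each road user under each action.
options = {
    "brake_hard": {"passenger": 0.4, "pedestrian": 0.1},
    "swerve": {"passenger": 0.1, "pedestrian": 0.5},
}

print(choose_by_claims(options, claims))  # prints "brake_hard"
```

Changing the strength values changes the chosen action, which reflects the flexibility claimed for the approach: different ‘moral positions’ can be expressed as different weightings without rewriting the decision procedure.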

When the algorithms in autonomous vehicles are coded with the theories of utilitarianism and deontology and the principles of the EVT, AVs should make better ethical decisions, thereby reducing the number of accidents that may occur. This may further advance AI technology to a level where human input is no longer required, adding to the technological revolution.

V. CONCLUSION:

In the case of fully automated vehicles, which are supposed to perform all driving tasks under varying conditions while the occupant behind the steering wheel is simply required to give instructions on where to go, a shared responsibility framework can be proposed. Under it, all stakeholders would recognize that the burden of a complex piece of technology must be shared by a ‘responsibility network’. This network would consist of programmers and manufacturers, who are responsible for building and integrating the different components so that information flows seamlessly into the vehicle’s controlling system, and of engineers and operators (drivers), who work on the algorithm and on the interface between hardware and software that enables the machine to make those calls.

From a larger perspective, we need to bear in mind that machines are not foolproof and need to undergo certain changes from time to time. Glitches of either a mechanical or a software kind may occur. We need a human with at least some basic level of control so that safety can be maximized when something goes wrong, and that is where a shared responsibility network or framework comes into the picture.

Finally, an information relay running back and forth between the controlling system in the vehicle and remote servers, assisted by complex communication systems, can be seriously considered as a solution to the everyday decision-making issues of the user behind the wheel.

Footnotes:

[1] Vikram Bhargava and Tae Wan Kim, Autonomous Vehicles and Moral Uncertainty in Patrick Lin, Keith Abney, and Ryan Jenkins (eds), Robot Ethics 2.0: From Autonomous Cars to Artificial Intelligence (Oct 2017)

[2] Chris Urmson, Just Press Go: Designing a Self-driving Vehicle, Google Official Blog, May 27, 2014. <http://googleblog.blogspot.com/2014/05/just-press-go-designing-self-driving.html>

[3] Daniel J. Fagnant and Kara M. Kockelman, Preparing a Nation for Autonomous Vehicles: Opportunities, Barriers and Policy Recommendations. Washington, DC: Eno Center for Transportation. (2013)

[4] Chris Urmson, n-2.

[5] The 6 Levels of Vehicle Autonomy Explained <https://www.synopsys.com/automotive/autonomous-driving-levels.html>

[6] National Highway Traffic Safety Administration (2008). National Motor Vehicle Crash Causation Survey. U.S. Department of Transportation, Report DOT HS 811 059

[7] ibid

[8] Sven A Beiker, Legal Aspects of Autonomous Driving, 52 Santa Clara L Rev 1145 (2012)

[9] Santokh Singh, Critical reasons for crashes investigated in the National Motor Vehicle Crash Causation Survey (Traffic Safety Facts Crash•Stats. Report No. DOT HS 812 115). Washington, DC: National Highway Traffic Safety Administration (February 2015)

[10] Jair Ribeiro, How Autonomous Vehicles will redefine the concept of mobility (4 Sept, 2020)

[11] Jeffrey R. Zohn, WHEN ROBOTS ATTACK: HOW SHOULD THE LAW HANDLE SELF-DRIVING CARS THAT CAUSE DAMAGES

[12] Kevin Funkhouser, Paving the Road Ahead: Autonomous Vehicles, Products Liability, and the Need for a New Approach UTAH L. REV. 437, 453 (2013)

[13] Nidhi Kalra, James Anderson & Martin Wachs, Liability and Regulation of Autonomous Vehicle Technologies, UCB-ITS-PRR-2009-28, ISSN 1055-1425, (April 2009)

[14] Products liability by Tiffany Funk: Restatement (Third) of Torts: Products Liability

[15] Cotton v. Buckeye Gas Products Co., 840 F.2d 937–939, 1988

[16] Katherine Evans, Nelson de Moura, et al., Ethical Decision Making in Autonomous Vehicles: The AV Ethics Project, Springer 3285, 2020

[17] Katherine Evans, Nelson de Moura, et al, n-16.

[18] Katherine Evans, Nelson de Moura, et al, n-16.

[19] Noah J Goodall, From trolleys to risk: Models for ethical autonomous driving (American Journal of Public Health 107) (2017)

[20] J Christian Gerdes and Sarah M Thornton, Implementable ethics for autonomous vehicles. In Autonomous Driving: Technical, Legal and Social Aspect, Springer 88 (2016)

[21] Hazel Si Min Lim and Araz Taeihagh, Algorithmic Decision-Making in AVs: Understanding Ethical and Technical Concerns for Smart Cities, Sustainability 1 (2019)

[22] Hübner Dietmar and Lucie White, Crash algorithms for autonomous cars: How the trolley problem can move us beyond harm minimisation, Ethical Theory and Moral Practice 685. (2018)

[23] Vincent Bonnemains, Claire Saurel and Catherine Tessier, Embedded Ethics: Some technical and ethical challenges, Ethics and Information Technology 41 (2018)

[24] Hazel Si Min Lim and Araz Taeihagh, n-21.

[25] Lin Patrick, Why ethics matters for autonomous cars, Springer 69 (2016)

[26] Vincent Bonnemains, Claire Saurel and Catherine Tessier, n-23.

[27] Hazel Si Min Lim and Araz Taeihagh, n-21.

[28] Katherine Evans, Nelson de Moura, Stephane Chauvier, Raja Chatila and Ebru Dogan, n-16.

[29] Noah J Goodall, n-19.

[30] Noah J Goodall, n-19.

[31] Hazel Si Min Lim and Araz Taeihagh, n-21.

[32] Vincent Bonnemains, Claire Saurel and Catherine Tessier, n-23.

[33] Lin Patrick, n-25.

[34] Liu Hin Yan, Three types of structural discrimination introduced by autonomous vehicles, UC Davis L. Rev. Online 51 (2017)

[35] Tzafestas G Spyros, Roboethics: Fundamental concepts and future prospects, Information 148, (2018)

[36] Ronald Leenes and Federica Lucivero, Laws on robots, laws by robots, laws in robots: regulating robot behaviour by design, Law, Innovation and Technology 193, (2014)

[37] Ronald Leenes and Federica Lucivero, n-36.

[38] Ronald Leenes and Federica Lucivero, n-36.

[39] Ronald Leenes and Federica Lucivero, n-36.

[40] Hazel Si Min Lim and Araz Taeihagh, n-21.

[41] Katherine Evans, Nelson de Moura, Stephane Chauvier, Raja Chatila and Ebru Dogan, n-16.

[42] Katherine Evans, Nelson de Moura, Stephane Chauvier, Raja Chatila and Ebru Dogan, n-16.

[43] Katherine Evans, Nelson de Moura, Stephane Chauvier, Raja Chatila and Ebru Dogan, n-16.

[44] Gibson James and Laurence E Crooks, ‘A theoretical field-analysis of automobile-driving’ [1938] The American Journal of Psychology 453.

[45] Katherine Evans, Nelson de Moura, Stephane Chauvier, Raja Chatila and Ebru Dogan, n-16.

[46] Katherine Evans, Nelson de Moura, Stephane Chauvier, Raja Chatila and Ebru Dogan, n-16.

Cite this article as:

Mr. Makrand Chauhan & Ms. Ifrah Khan, Liability for Accidents By Autonomous Vehicles, Vol.3 & Issue 1, Law Audience Journal, Pages 240 to 252 (30th June 2021), available at https://www.lawaudience.com/liability-for-accidents-by-autonomous-vehicles/.